Recommendations to Support the Validity of Claims in NGSS Assessment (Part 1)

Designing Assessments to Support Claims

Once again this year, we are pleased to share posts on CenterLine by our summer interns and their mentors. These posts are based on the projects the interns undertook and the assessment and accountability issues they addressed this summer. Sandy Student, from the University of Colorado Boulder, and Brian Gong get things started with a two-part series describing their work analyzing the validity arguments for states’ large-scale Next Generation Science Standards (NGSS) assessments.

Assessing the Next Generation Science Standards (NGSS) on a large scale poses many challenges. One of them is specifying claims about students that can be justified by the evidence from a summative assessment. This summer, I worked with Brian Gong to develop interpretive validity arguments (Kane, 2006, pp. 17-64) for the summative science assessment practices and claims of two states, Washington and Delaware. Based on those analyses, we present our recommendations for states that are adopting the NGSS and developing their own large-scale science assessments.

Our recommendations mirror our analysis methodology, which relies primarily on publicly available documentation of a given state’s assessment: item specifications, test blueprints, achievement level descriptors (ALDs), and technical manuals. For each of these levels of documentation, we provide recommendations that should be practical for states to implement and that would support the development of stronger evidence for student-level claims than is currently available.

Item-level: Use clustered items to the greatest possible extent

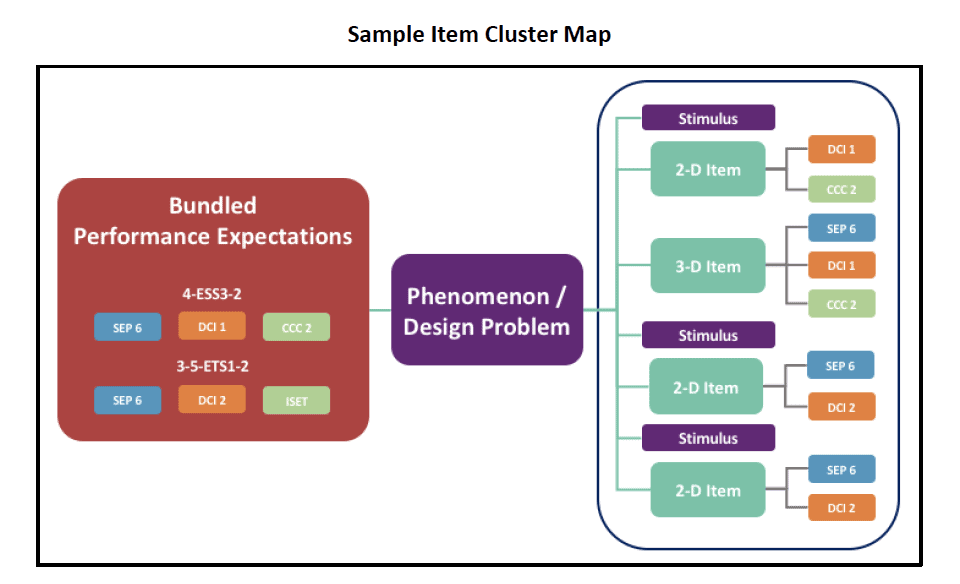

The NGSS are replete with standards that call for types of thinking that are difficult to capture in a standardized testing setting, such as the science and engineering practice (SEP) of modeling, or the crosscutting concept (CCC) of cause and effect applied within a particular disciplinary core idea (DCI). To support claims about students that reflect these types of thinking, tests need items that can elicit them. Item clusters approach this problem by asking students multiple related questions about a common phenomenon. Clusters can be designed to give students opportunities to demonstrate increasingly complex thinking and various combinations of DCIs, SEPs, and CCCs.

Both Washington and Delaware use item clusters in an effort to elicit the complex thinking that the NGSS target (WestEd, 2019, 2020). Delaware’s integrative item clusters are especially promising because of their narrative structure and their requirement of an extended-response item. Item clusters also address the limited construct representation of standalone items. As implemented in Washington and Delaware, item clusters target two or three performance expectations (PEs) and more closely mirror the NGSS’s expectation that students be able to apply multiple DCIs, SEPs, and CCCs to make sense of a specific phenomenon. “Crossed” PE designs, in which some of the items within a given cluster target DCI/SEP/CCC combinations that mix the dimensions of more than one PE, may also provide evidence of proximal transfer that standalone items cannot.

These are, of course, challenging items to develop. However, test developers and states already recognize that standalone items do little to elicit the type of thinking the NGSS target. Standalone items are typically included to achieve coverage of the standards, but if they provide little information about the type of thinking they are supposed to target, their contribution to the validity of score-based claims is minimal. Test forms should therefore leverage item clusters to the greatest extent possible. Although broad coverage of the standards can be achieved through matrix sampling, the recommendations below reduce the need to cover such comprehensive standards at all.

Test blueprint: Balance depth and breadth on test forms

As noted above, standalone items are not a strong source of evidence about what students know and can do with respect to the NGSS. They are typically included in the test blueprint to increase the breadth of the standards to which each test form aligns; however, the evidence they yield about these broad standards is weak, both for content representation and for the cognitive complexity described in the claims. Because the NGSS strive for deeper learning of more complex content and skills, we recommend that test blueprints emphasize depth. Strong evidence about a smaller “slice” of the standards is preferable to broad coverage that yields little useful information and may misrepresent the claims and intentions of the NGSS. Issues of alignment should be addressed through matrix sampling within the year, sampling over multiple years, or, ideally, student claims that are narrow enough for evidence from large-scale tests to support them.
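To make the depth-versus-breadth trade-off concrete, the Python sketch below shows one simple form of matrix sampling: a cyclic ("spiraled") assignment of blocks of item clusters to test forms. The block contents, the labels `PE1` through `PE8`, and the function name `spiral_forms` are hypothetical. Operational designs (for example, balanced incomplete block designs) are more elaborate, and nothing here describes how Washington or Delaware actually assemble their forms.

```python
from itertools import chain


def spiral_forms(blocks, blocks_per_form):
    """Assign blocks of item clusters to forms cyclically (hypothetical sketch).

    Form i receives blocks i, i+1, ..., i+blocks_per_form-1, wrapping around
    the end of the pool. Assuming blocks_per_form <= len(blocks), n blocks
    yield n forms and every block appears on exactly blocks_per_form forms.
    """
    n = len(blocks)
    return [
        list(chain.from_iterable(blocks[(i + k) % n] for k in range(blocks_per_form)))
        for i in range(n)
    ]


# Hypothetical pool: four blocks, each holding two clusters labelled by target PE
blocks = [["PE1", "PE2"], ["PE3", "PE4"], ["PE5", "PE6"], ["PE7", "PE8"]]
forms = spiral_forms(blocks, blocks_per_form=2)

for number, form in enumerate(forms, start=1):
    print(f"Form {number}: {', '.join(form)}")
```

Each form carries only half of the pool, which leaves room for deeper clusters, while the pool as a whole is covered across forms. This kind of coverage supports inferences about groups of students (schools, districts, the state) rather than about an individual student, who sees only one form.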

Large-scale assessments need to balance the depth of individual items with coverage of the targeted standards. It is possible to create test forms that are very deep but insufficiently broad, which undermines the comparability of scores across forms. Prior studies in performance assessment, especially in science, have produced evidence of large task- and occasion-specific variance (Brennan & Johnson, 2005; Cronbach et al., 1997; Gao et al., 1994; Solano-Flores & Shavelson, 2005). In other words, a student’s performance depends heavily on which particular task they receive and when they take it. So although a single, very in-depth performance task may be the strongest available source of evidence about the specific NGSS dimensions it targets, the ability to make general comparisons of student learning is severely compromised. Such comparisons are typically a fundamental goal of standardized large-scale testing, and losing them would be too costly.
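Generalizability theory makes the cost of task-specific variance explicit. For a design in which persons are crossed with tasks and occasions, the relative generalizability coefficient divides person variance by person variance plus relative error, and the task-related error terms shrink only as more tasks are added. The sketch below computes this coefficient as the number of tasks per form grows. The variance components are invented for illustration and are not taken from the studies cited above; `g_coefficient` is a hypothetical helper name.

```python
def g_coefficient(var_p, var_pt, var_po, var_pto_e, n_tasks, n_occasions=1):
    """Relative G coefficient for a crossed persons x tasks x occasions design.

    E(rho^2) = var_p / (var_p + var_pt/n_t + var_po/n_o + var_pto_e/(n_t*n_o))
    """
    # Relative error: only interactions with persons affect rank-ordering of students
    relative_error = (
        var_pt / n_tasks
        + var_po / n_occasions
        + var_pto_e / (n_tasks * n_occasions)
    )
    return var_p / (var_p + relative_error)


# Hypothetical variance components, invented for illustration only:
# person-by-task variance (var_pt) is deliberately larger than person variance (var_p)
components = {"var_p": 0.30, "var_pt": 0.40, "var_po": 0.02, "var_pto_e": 0.28}

for n_tasks in (1, 2, 4, 8):
    print(n_tasks, round(g_coefficient(**components, n_tasks=n_tasks), 2))
```

With these made-up components, the coefficient rises from 0.30 for a single task to about 0.74 for eight tasks. When person-by-task variance is large, a form built around one deep task yields scores that say as much about the task a student happened to receive as about the student.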

Align test blueprint to the claims you want to make

On tests that use traditional discipline-based reporting areas (i.e., subscores in life, physical, and earth/space science), one might be tempted to create a test blueprint based primarily on a roughly equal distribution of items across reporting areas, as found in Washington’s current blueprint (Washington OSPI, 2019). However, this makes sense only if the claims the state wants to make are also aligned primarily to these reporting areas.

Washington’s claims, as expressed in its ALDs, are primarily oriented toward SEPs (Norris & Gong, 2019). One way to address the problem of overly broad test blueprints, which require items that yield little evidence of student thinking, is to rethink the typical science test blueprint. Dropping reporting areas from the test blueprint entirely, or adding a scheme to sample systematically across SEPs, would significantly strengthen such SEP-oriented claims, as would a research agenda that establishes the generalizability of performance across SEPs for most students.

States may also want to make claims about transfer, as Delaware does. Here, we emphasize two recommendations. First, be specific about the type of transfer the test targets, since “transfer” can mean many different things (Barnett & Ceci, 2002). Second, when item clusters are written to measure transfer, they must include varying levels of scaffolding so that students at all achievement levels have an opportunity to demonstrate what they know and can do. Specifying a target distribution across some measure of support or cognitive complexity in the test blueprint ensures that operational forms actually include this range. It also makes it possible to formulate claims about transfer that are conditioned on these different levels of support.
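A blueprint specification of this kind can be checked mechanically during form assembly. The purely illustrative sketch below tallies the items on a draft form by a tagged attribute (here, the targeted SEP and a three-level "support" tag) and reports any shortfall against minimum counts. The tag names, the shortened SEP labels, the support levels, the target counts, and the function `shortfalls` are all assumptions made for the example, not any state’s actual blueprint.

```python
from collections import Counter


def shortfalls(items, key, targets):
    """Return {value: number of items missing} for each blueprint minimum not met.

    items: list of dicts describing the items on a draft form
    key: the tag to tally, e.g. "sep" or "support"
    targets: minimum number of items required for each tag value
    """
    counts = Counter(item[key] for item in items)
    return {
        value: minimum - counts[value]
        for value, minimum in targets.items()
        if counts[value] < minimum
    }


# Hypothetical draft form; SEP labels are shortened and support levels are invented
draft_form = [
    {"id": "A1", "sep": "models", "support": "high"},
    {"id": "A2", "sep": "models", "support": "medium"},
    {"id": "A3", "sep": "data", "support": "high"},
    {"id": "A4", "sep": "explanations", "support": "medium"},
    {"id": "A5", "sep": "explanations", "support": "low"},
    {"id": "A6", "sep": "argument", "support": "high"},
    {"id": "A7", "sep": "argument", "support": "medium"},
]

# Hypothetical blueprint minimums
SEP_TARGETS = {"models": 1, "data": 1, "explanations": 1, "argument": 1, "investigations": 1}
SUPPORT_TARGETS = {"high": 2, "medium": 2, "low": 2}

print(shortfalls(draft_form, "sep", SEP_TARGETS))          # {'investigations': 1}
print(shortfalls(draft_form, "support", SUPPORT_TARGETS))  # {'low': 1}
```

The same check serves both purposes discussed in this section: sampling systematically across SEPs, and guaranteeing that transfer clusters span a range of scaffolding so that claims can be tied to levels of support.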