Can a State Test Inform Instruction?

The Typical, the Improbable, and the Possibilities in the Messy Middle

When I participate in meetings with state leaders, district and school educators, parents, or other stakeholders who want their state test to inform instruction, I am reminded of the scene in the movie “The Princess Bride” in which Vizzini keeps repeating the word “inconceivable” as the man in black continues climbing the cliff, even after the rope has been cut. Inigo Montoya turns to Vizzini and says: “You keep using that word. I do not think it means what you think it means.”

In this post I want to say to them, “You keep using the term ‘inform instruction,’ but I don’t think it means what you think it means.”

Rather than simply responding “inconceivable,” I’m hoping to clarify the ways in which ESSA-compliant state tests can and cannot actually be used to inform instruction.

I focus on how state assessments designed to maximize instructionally useful information (i.e., timely, fine-grained information in relation to the enacted curriculum; see Marion, 2019) carry corresponding tradeoffs in testing efficiency and local control over curriculum. These are not the only tradeoffs, but I emphasize them here for illustration:

- If we want more fine-grained information from a state assessment system, then the test length and/or number of test administrations must increase; and

- If we want to align state assessment reports and score information with the enacted curriculum, then local curriculum adoption, curriculum scope and sequencing, and pacing must be more standardized across classrooms in the state.

Typical Instructional Actions Informed by State Tests

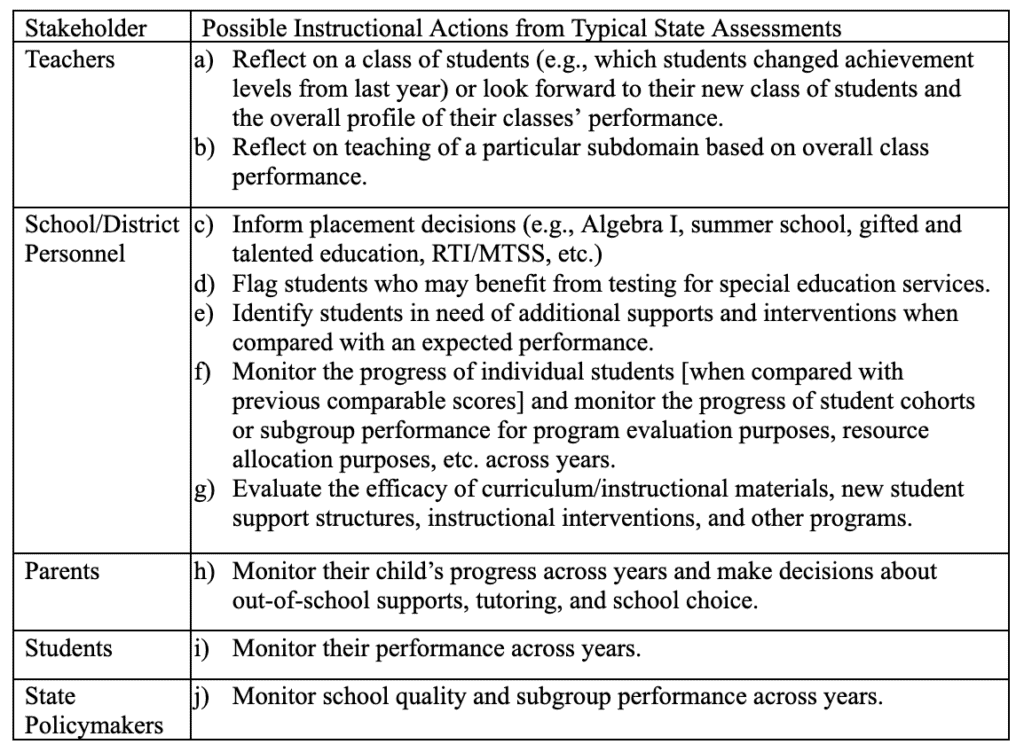

Most state tests and testing programs are designed with similar purposes and uses in mind. To meet the federal requirement that state test results be used in accountability systems to evaluate school quality, state tests sample from across the breadth and depth of the content standards to produce an overall achievement level and reporting category subscores for each student. In this context, “informing instruction” uses the broadest sense of the term “inform”: it refers to what can be gleaned from comparable individual or aggregate achievement levels and subscores to guide future instruction.

For example, state tests currently provide assessment information that various stakeholders could use to make several determinations or inferences, as summarized in the Table.

These instructional uses of state test information are consistent with the goal of maximizing the efficiency of state testing because

a) they only require tests to be administered once at the end of the year,

b) computer-adaptive testing can be used to shorten test length for individual students, and

c) reliable estimates of overall achievement level can be produced with shorter tests than would be needed to provide more fine-grained information.
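Item (c) reflects a general psychometric relationship between test length and score reliability. One common way to approximate that relationship is the Spearman-Brown prophecy formula, which assumes that any items added or removed are parallel to the existing ones. The sketch below is illustrative only: the 50-item test, its 0.90 overall reliability, the 10-item reporting category, and the 0.80 target are hypothetical numbers, not figures from any actual state test.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability when a test is lengthened (factor > 1) or
    shortened (factor < 1), assuming parallel items (Spearman-Brown)."""
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


def length_factor_needed(current: float, target: float) -> float:
    """Inverse of Spearman-Brown: how many times longer a test must be
    to move from `current` reliability to `target` reliability."""
    return target * (1 - current) / (current * (1 - target))


# Hypothetical values for illustration only (not from any real state test):
total_items = 50            # items on the full test
overall_reliability = 0.90  # reliability of the overall score
subscore_items = 10         # items in one reporting category
target_reliability = 0.80   # reliability we might want for a subscore

# A 10-item subscore is a test one-fifth as long as the full test.
subscore_reliability = spearman_brown(overall_reliability, subscore_items / total_items)

# How many items the reporting category would need to reach the target.
factor = length_factor_needed(subscore_reliability, target_reliability)
items_needed = subscore_items * factor

print(f"Predicted subscore reliability: {subscore_reliability:.2f}")
print(f"Items needed to reach {target_reliability:.2f}: {items_needed:.0f}")
```

Under these assumptions, a 10-item reporting category has a predicted reliability of about 0.64 and would need roughly 22 items to reach 0.80. Multiplied across several reporting categories, this is why more fine-grained information requires longer tests or more administrations.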

Typical instructional actions tied to state assessments can also maximize local control over curriculum adoption, pacing, scope and sequencing because there doesn’t need to be a tight coupling between what is taught at any point in the year and what is on the state test. Instead, state content standards, eligible content, and test blueprints provide a framework that schools and districts can use to decide what is taught, when, and at what depth, so as to maximize students’ opportunity to learn and to demonstrate what they know and can do on the state test at the end of the year.

Instructional Actions Where the Utility of State Tests is Highly Improbable

To ask a state assessment to inform instruction in the way that the term is commonly used would, at best, have sizable implications for state assessment design and implementation as well as for curriculum and instruction, which is why I called this section “improbable.” Perhaps I should have used the word “inconceivable,” or even “undesirable.”

There are many daily instructional actions that classroom teachers make based on observations (formal and informal), discussions, student work products, and other real-time evidence of student learning. Teachers need timely, fine-grained, and actionable information that they can use to

a) monitor/adjust their instruction in relation to their curriculum/instructional materials;

b) inform the assembly of instructional groups for a particular lesson;

c) provide feedback to students on learning progress; and

d) identify where to intervene based on student misconceptions, strengths and learning needs.

Best practice in classroom assessment focuses on formative assessment processes in which feedback from the teacher, peers, and students themselves is used to help all students know the intended learning targets and success criteria, where they are in relation to those targets, and how to get there.

Classroom summative assessments, typically given at the middle or end of an instructional unit, are used to gather evidence of the extent to which students have met the intended learning targets aligned to the state content standards.

To inform instruction in that same manner, state testing would need to mimic the classroom assessment process described above. State assessments would need to be administered frequently (daily or weekly), just as classroom assessments are administered now, which would sharply reduce testing efficiency.

Also, these frequently administered state assessments would need to be delivered in a secure format because of their high-stakes accountability use, which would limit their utility for informing instruction. Teachers wouldn’t see the items/questions ahead of time or likely even after testing (given the cost of developing secure items), nor would they see how students answered.

State assessments intended to supplant the purposes and uses of typical classroom assessments would also need to be curriculum agnostic, because in most states curriculum is a local decision. Given this situation, it seems inconceivable that assessment results (e.g., percent correct or achievement level) with no known connection to the enacted curriculum could inform instruction in the same ways that best practices in formative and summative classroom assessment (e.g., qualitative analysis of student work) can.

In this context, the tradeoffs in testing efficiency and local control over curriculum are significant.

Not Typical, But Possible Instructional Actions Tied to State Assessment

There is a reason why the use of commercial interim assessments has proliferated in recent decades. Interims are often used to fill the gap in what I call the messy middle, between the typical information gained from end-of-year state tests and the classroom assessment information teachers use and need to inform instruction on a daily basis. Instructional uses in the messy middle are not typical of state assessments, but they are often desired and are possible to achieve if state assessments become through-year assessment systems redesigned to fulfill additional instructional purposes.

Dadey & Gong (2021) define through-year assessments as assessments that are (1) administered through multiple distinct administrations across a school year and (2) meant to support both the production and use of a summative determination of student proficiency and one additional aim. Often that additional aim is instructional utility. Many commercial interim assessments currently used by districts and schools can be classified as a form of through-year assessment, although they are not necessarily aligned to state standards.

Through-year assessments can serve all the purposes listed in the Table under typical state assessment instructional actions while adding within-year progress monitoring, which allows stakeholders to

a) monitor the progress of individual students and/or identify students in need of additional supports and interventions within the school year; and

b) monitor the progress of student cohorts or subgroup performance for program evaluation purposes, resource allocation purposes, etc. within the school year.

While this assessment information doesn’t replace the need for classroom assessments, it does supply progress monitoring information that many school/district leaders want during the school year, if the amount of money and time spent on commercial interims is any indication.

Yet, with interim assessments, there are still challenges and tradeoffs in terms of efficiency and local control over curriculum that states and their stakeholders should carefully consider.

In terms of tradeoffs, the testing efficiency of through-year assessment models depends on current practices in districts and schools. If current practice is 1 end-of-year state test + 3 district interims (fall, winter, spring), then moving to a through-year state assessment design with 1 end-of-year state test + 2 state interims (fall, winter) reduces the number of administrations from four to three and is therefore more efficient. It also likely yields a more coherent signal, given the alignment issues commercial interims can have with state standards and performance expectations.

However, if a district or school continues to administer 3 commercial interims (or even district-created interims) in addition to the state through-year system, or the through-year design is more modular, then it will mean more testing and be less efficient. Efficiency and comprehensiveness are in tension.

Through-year designs may also require tight coupling with local curricula, depending on the state’s theory of action. If the state moves to a through-year system because it believes that doing so will increase the quality, coherence, and efficiency of the assessment information districts and schools use to adjust programs, supports, interventions, and instruction (broadly defined), then the state has to decide how tightly coupled the interims must be to the enacted curriculum. A full discussion of this issue is beyond the scope of this post; however, the more that is required of the state assessment system throughout the year, the less local control there is over curriculum adoption, pacing, scope and sequencing.

Conclusion

My goal in writing this post is to highlight the need to clarify and be very specific about what we mean when we say that we want the state test to “inform instruction.” Rather than claim that a state test can never inform instruction, I’ve tried to explain in what ways and for whom it is possible and typical for a state test and testing program to inform instruction.

I’ve also tried to delineate the classroom assessment space and discuss why it is inconceivable that state assessment could ever inform instruction in the ways normally understood by classroom educators.

Finally, I discussed progress monitoring. Although progress monitoring is not a typical use of state assessment, it is certainly possible, and seemingly desirable, to add it as a key design feature of state assessment systems with reasonable tradeoffs.

In closing, at least two questions remain in my mind regarding stakeholders’ desire for state assessment to inform instruction:

- Is progress monitoring really what stakeholders want when they say they want their state test to better inform instruction?

- Or, if what stakeholders really want is best served by classroom assessments, are they really asking for better state support of local assessment efforts, rather than more state testing, when they say they want their state test to better inform instruction?