A 10-Step Guide for Incorporating Program Evaluation Into the Design of Educational Interventions

Proving What You Think You Know

In two recent CenterLine posts, my colleague Chris Brandt and I made a case for the importance of incorporating program evaluation in education into the design and implementation of any program intended to improve educational outcomes and have a positive impact on student learning. In this post, I offer a 10-step guide to designing a solid program evaluation from the beginning of a program. I also propose an abridged version of the guide that could be used to evaluate existing programs or when you are short on the resources (e.g., time, money, and personnel) needed to carry out the full 10-step guide.

From Solving to Mastering the Rubik’s Cube

If you’ve read any of my previous posts, you know that I have to tie my message to some kind of analogy, metaphor, or (hopefully) related story drawn from my own everyday experiences or sometimes those of my son. I saw this post as an opportunity to offer a multi-generational parable to demonstrate the importance of having a well-planned guide to help you know that what you are doing is working and prove you know what you think you know.

Recently, my son has transitioned away from Fortnite (see post here) to mastering a Rubik’s Cube. Apparently, one does not simply try to solve a Rubik’s Cube anymore.

When I first encountered a Rubik’s Cube in the 1980s, it was a colorful puzzle you tried to solve, most often unsuccessfully. There was a well-defined problem (get one solid color on each face of the cube) but no road map to guide you from the starting point to the desired outcome. For as long as the cube held your interest, you adopted a strategy like solving one face of the cube at a time or starting with the corners, but you never had any way of knowing whether you were closer to a solution. Eventually, you forgot about the problem you were trying to solve and started creating pretty patterns on the faces of the cube that friends and family found appealing. But you were no closer to the solution, as far as you knew, and eventually, the cube ended up unsolved on a shelf or as a paperweight on your desk.

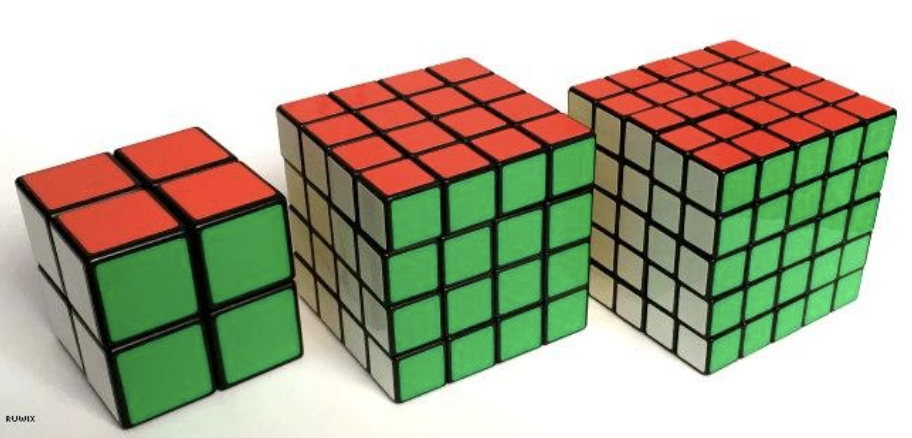

My son’s experience with the Rubik’s Cube, in contrast, began with training. How does one go about getting that training? Through YouTube, of course. There are an astounding number of how-to videos coaching you on how to solve a Rubik’s Cube using various move sets (aka “algorithms”). These algorithms vary in complexity, in the skill they require, and in when they should be applied. Some are very repetitive and can be applied to most cubes, while others are a mix-and-match set that requires greater skill and awareness but solves the cube much faster. He’s gotten pretty quick with the standard-sized cubes and now wants to try his hand at a 4×4.

Consider solving the Rubik’s Cube as the initial problem. That problem has been solved. Actually, it seems that every kid at the bus stop plays with Rubik’s Cubes as fidget toys, and they all seem to be solving them better than I can (another great example of the availability of information on the web). My son would not have learned how to solve the cube if he hadn’t had access to how-to videos. And he wouldn’t have gotten better at solving it if he hadn’t measured his progress regularly against the solutions provided by those videos.

Now he can move on to the next problem: getting faster and more efficient at solving the cube, and solving it under certain conditions, time bounds, and other constraints. Speed and efficiency (number of moves) are now the measures he applies as part of the evaluative process. Do you see where I am going?

My son’s example of solving and mastering a Rubik’s Cube highlights the need for a well-defined problem, a solution (i.e., how-to videos), and an evaluative process to determine efficacy both along the way and over time.

We have well-defined problems in education, and we even have some research-based solutions (e.g., embedding formative assessment processes, curriculum, and instruction based on the science of reading). What we often lack is the evaluative process to determine the efficacy of our attempts to implement those solutions in our context both along the way and over time.

Identifying the Problem and Planning for an Educational Program Evaluation

Before I describe the steps to conducting a high-quality evaluation, recall that it is important to first know what we’re even trying to evaluate (e.g., solving a Rubik’s Cube, completing an obstacle course race, or improving student learning). My colleague Chris Brandt described in a recent post the importance of applying the principles of improvement science with a focus on identifying and defining the problem to be solved. He also noted that once we have identified the problem and its root causes, we should apply an improvement framework to answer the following three core questions (Langley et al., 2009):

- What is our goal? What are we trying to accomplish?

- What change might we introduce and why?

- How will we know that a change is actually an improvement?

At the end of my recent post (after comparing educational program evaluation to obstacle course racing), I suggested the need to describe the steps in planning a well-designed evaluation based on a solid program theory and logic model. As I mentioned, program evaluation is a systematic method for collecting, analyzing, and using information to answer questions like question 3: How will we know that a change is actually an improvement?

Clearly defining the theory of action and program logic are two of the most critical steps in developing an evaluation plan. The more detailed a logic model, the clearer the evidence for defining program success.

As Chris explained in his post and I demonstrated in a previous post about my experience building a patio, it is essential to ensure the proper evidence is aligned with the program’s resources, outputs, and short-, mid-, and long-term outcomes. A robust evaluation is based on a solid plan and on data elements, or indicators of proof, defined a priori. These indicators inform what evidence should be collected, documented, and used to make interpretations.

The logic model identifies the components that are important to be aware of when connecting the program to an evaluation. Think of it more as an organizer for your program that can be used to inform the evaluation. It makes creating the evaluation much more efficient in the same way that organizing puzzle pieces by edges and center pieces makes for an easier puzzle-making experience.

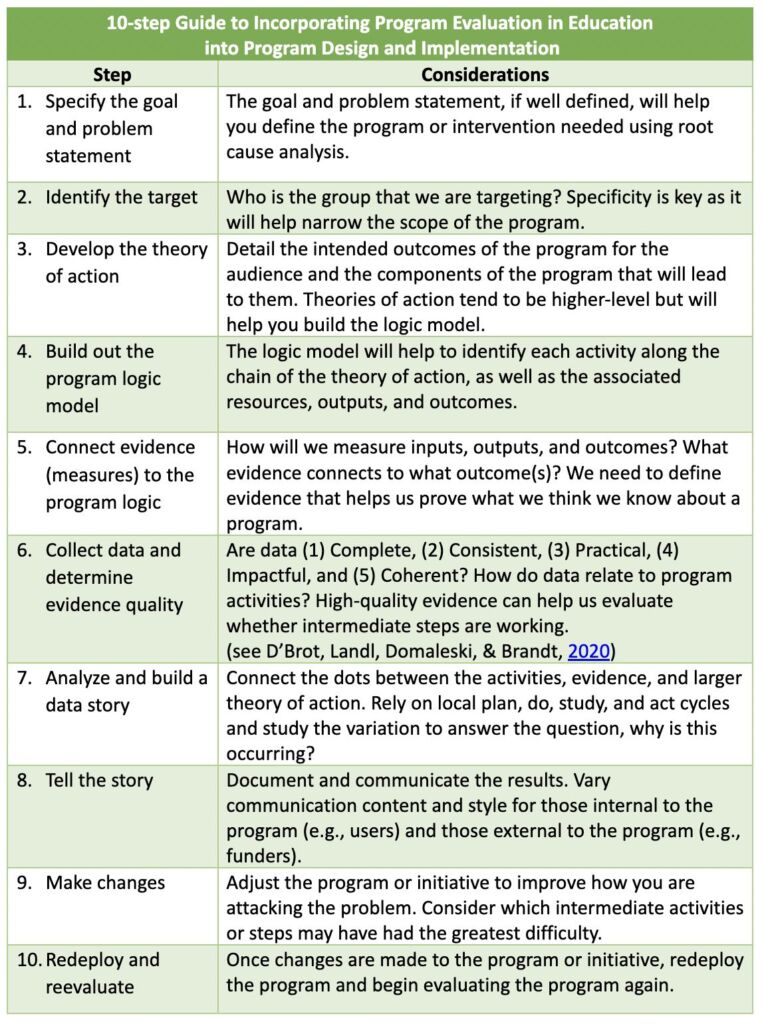

Once you have organized your program, you can use the following 10 steps to conduct your own evaluation. They are presented in the table below.

When Good Design Meets Real-World Constraints

I’ve been involved in a lot of educational program evaluations, and not all of them had the budget, personnel, and time to include all of the steps in the table above. Many times, particularly when working with states and districts, evaluations are added to the existing program rather than embedded from the beginning of the design. When that is the case, we need to narrow our scope and focus our limited resources. If I were trying to identify the bare-bones number of steps to evaluate a program, I would suggest focusing on the following three:

- Build out the program logic model. Theories of action are important, and even if not documented, many program designers and implementers have an implicit theory of action. A logic model forces us to be more specific about the actual activities that are intended to bring about change, the resources that are necessary, the parties responsible, and the outputs that will lead to certain intended outcomes.

- Connect evidence (measures) to each activity in the logic model. This step translates the outputs into measurable data elements. In some cases, evidence may be direct observations, such as counts, completed tasks, or attendance at a training. In other cases, the evidence is a little more difficult to capture, such as responses to surveys, interviews, or document reviews. A coherent connection between the evidence (i.e., data elements) and the activities is what matters most for making judgments during the next step.

- Collect data and determine evidence quality. The collection of high-quality evidence that speaks to whether you are making incremental progress along the way is critical. This evidence helps justify that the intermediate activities along the way will positively impact intended outcomes. Even if the evidence is not analyzed across all activities, evaluating the intermediate steps can help us identify where we may need to go back and redeploy a step in the process to ensure that the program doesn’t break down.

Ideally, we would want to engage in all 10 steps, but that’s often not practical or reasonable. I offer these as the three most critical steps that I believe can help program designers and implementers monitor progress along the way rather than waiting for changes in the outcome.
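For readers who like to see ideas written down concretely, here is a minimal sketch of how the three steps might be tracked in Python. Everything in it is an assumption made for illustration: the `Activity` class, the helper functions `unmeasured` and `missing_evidence`, and the two sample activities are hypothetical and are not taken from any real program or evaluation tool. The sketch simply lists which activities have no evidence attached and which planned measures have not yet been collected.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Activity:
    """One activity in a hypothetical program logic model (step 1)."""
    name: str
    output: str  # what the activity is expected to produce
    # Evidence (measures) connected to this activity (step 2).
    measures: List[str] = field(default_factory=list)
    # Measure name -> True once data for it has been collected (step 3).
    collected: Dict[str, bool] = field(default_factory=dict)


def unmeasured(activities: List[Activity]) -> List[str]:
    """Names of activities that have no evidence attached."""
    return [a.name for a in activities if not a.measures]


def missing_evidence(activities: List[Activity]) -> List[Tuple[str, str]]:
    """(activity, measure) pairs that were planned but not yet collected."""
    return [
        (a.name, m)
        for a in activities
        for m in a.measures
        if not a.collected.get(m, False)
    ]


# Illustrative entries only; not drawn from any real program.
logic_model = [
    Activity(
        "Teacher training",
        "Teachers attend workshops",
        measures=["attendance count", "post-training survey"],
        collected={"attendance count": True},
    ),
    Activity("Classroom coaching", "Coaches observe lessons"),
]

print(unmeasured(logic_model))
print(missing_evidence(logic_model))
```

In this made-up example, the first list flags "Classroom coaching" as an activity with no evidence connected to it, and the second flags the post-training survey as planned but not yet collected. A spreadsheet would serve the same purpose; the point is only that each activity carries its own evidence and that gaps are visible while the program is still running.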

Without a solid evaluation plan, we increase the risk that we are duplicating efforts, applying the wrong program, or simply wasting resources. By monitoring progress as we go, rather than just in retrospect, we are better equipped to identify which activities need to be corrected and to correct them along the way.