New NAEP Scores Don’t Support Political Arguments

Looking Beyond the Headlines to Understand the Significance of the “Nation’s Report Card”

The 2022 Main NAEP scores, released today, portray the devastating effects of COVID-related disruptions on student achievement across the United States. These new results put policymakers on a dangerous precipice: They could misinterpret the stories these and other test results are telling, leading to the wrong decisions for kids.

You’ve probably heard the topline results already: Reading scores declined at both 4th and 8th grade, back to the score levels last seen in the early 2000s. Math results were worse, with the largest score declines since the inception of the assessments in 1990. Results varied across states, but while some states held their ground in certain grades and subjects, no state was unscathed across both 4th and 8th grades in reading, and especially in mathematics. Critically, COVID’s impact was more severe for the students, particularly in 4th grade, who could least afford to lose ground.

This NAEP is the most important administration in the program’s history because it provides the first nationally comparable pre- and post-pandemic examination of student achievement. The results carry a clear message for state policymakers: They need to step up in a big way before we lose a generation of students. But first, state and district leaders need to accurately interpret the NAEP results, and also consider the results from other tests.

Look Beyond the Headline Numbers

Now that the second set of NAEP results has been released, we’re awash in rich, but potentially confusing, data. If we don’t interrogate the multiple sources of assessment information honestly, states and districts could miss opportunities to intervene on behalf of students.

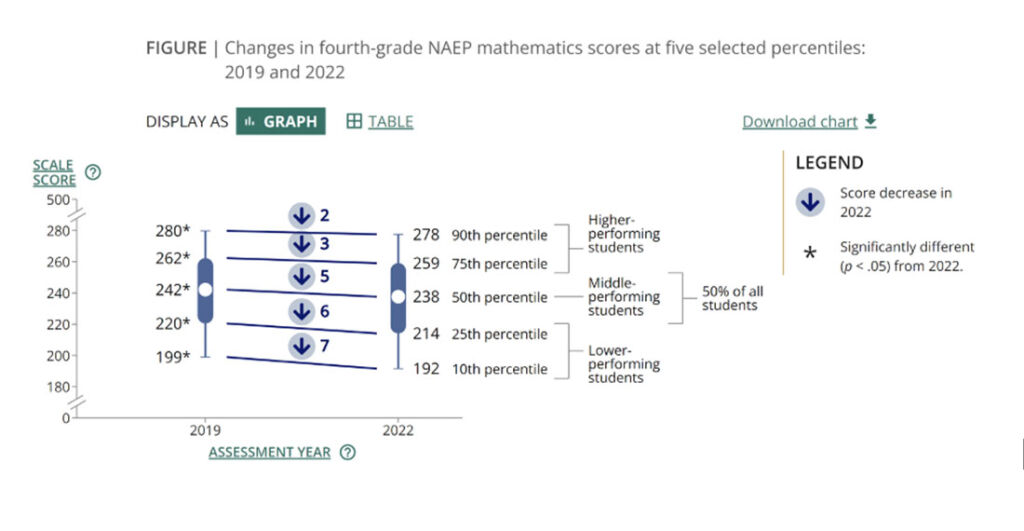

Many people will be tempted to look at the overall NAEP scores for their states to see how they compare to other states. I understand this temptation. But in order to understand where needs are greatest, leaders must examine the extensive set of disaggregated results across all four tests: math and reading, 4th and 8th grades.

Here’s an example that shows why this kind of analysis is important. The National Center for Education Statistics (NCES), the agency responsible for NAEP, and the National Assessment Governing Board (NAGB), the group that sets many of the policies for NAEP, have both been focused on the growing achievement gap between the lowest and highest performers. As you can see in the graph below, the 4th-grade math scores for the lowest-performing students (10th percentile) dropped 7 points, while the highest-performing students (90th percentile) declined only 2 points.

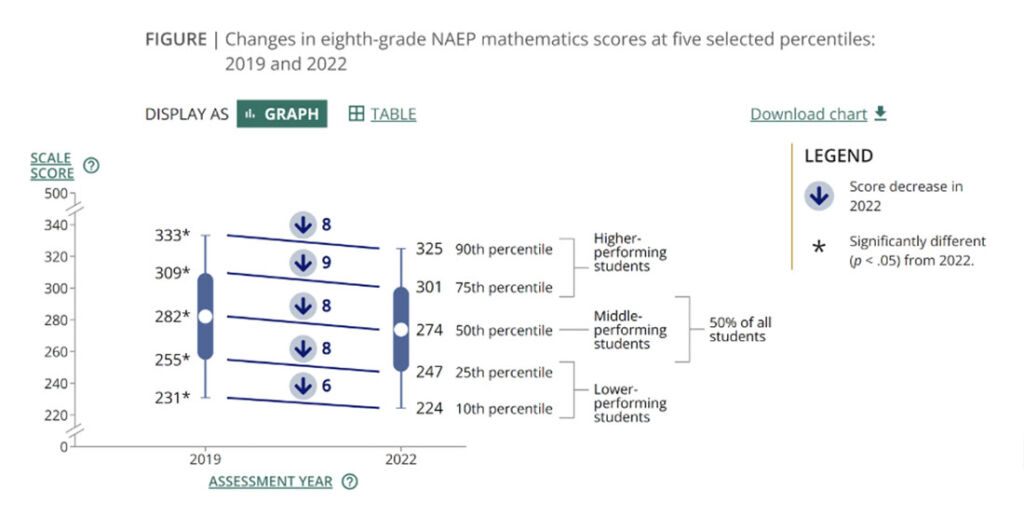

One could reasonably conclude that the pandemic hit the lowest-performing students the hardest, and this conclusion would be supported by these results. But take a look at the 8th-grade math scores to see why I’ve been urging people to look across all four tests before drawing general conclusions.

In 8th-grade math – the grade/content area that was probably hit hardest by COVID – the drops in performance were quite consistent across the full achievement continuum.

Why are the differing patterns between 4th- and 8th-grade math worth noticing? Because information like this can add to a nuanced analysis of performance that – along with state summative and interim test results – can inform a thoughtful response from state and district leaders. Such analyses will allow educators and policymakers to provide support where it’s needed most.

Be Skeptical of Rosy Interpretations of NAEP Scores

State leaders and their assessment experts now have to reconcile this new NAEP data with their own summative state results. Most state assessment results tell a story similar to NAEP’s, but some states are reporting that students are essentially back to pre-pandemic levels. Unless such positive results are confirmed by NAEP, I’d urge leaders not to believe such a rosy picture.

I admit I’m a cynic, but that’s not what’s driving me here. It’s just common sense. If students’ learning was interrupted to the degree that most test data and extensive opportunity-to-learn data suggest, it makes no sense to think students could have returned to their prior levels of performance in just a couple of years. Even in states where most schools were open for in-person learning during 2020-2021, education was still far from normal.

When state assessments serve up a rosy picture of COVID impact or student recovery, NAEP can provide a crucial reality check. Here’s why.

It’s the only test in the country that can provide valid comparisons across states and large urban districts. State summative tests can’t do that. Even the national NAEP Long-Term Trend results released on September 1 can’t do that. Additionally, NCES is able to ensure the validity of NAEP results in ways that state and interim assessments cannot. This validity is due largely to rigorous sampling protocols that ensure that the pools of students participating in the 2019 and 2022 tests were as comparable as possible: an “apples to apples” comparison. NCES also has the time, resources, and expertise to ensure that the inferences that can be drawn from the 2022 test scores are equivalent to those from the 2019 scores. They do this through a set of processes known as test equating.

All of these strengths position NAEP as a powerful basis for understanding patterns of student learning. That said, there is always a degree of uncertainty in any test results. No test – including NAEP – portrays “the truth.” And I say that even as a proud member of NAEP’s Governing Board. It’s just good, basic psychometrics.

It would be unusual for any state’s results to be a perfect reflection of NAEP. There are many reasons to explain these differences.

First, NAEP and state tests don’t measure exactly the same math and reading content. The differences aren’t usually substantial, but any difference can lead to differences in test results.

Second, the NAEP achievement levels – the cutoff points for each level of performance – are different from those for states’ own end-of-year assessments, making it impossible to compare the percentage of students scoring at basic, proficient, and other levels across state and NAEP tests. It’s intuitively appealing, I know, to think you could compare the difference between the percentages of students who scored proficient or higher on NAEP tests in 2019 and in 2022 with similar percentages on state tests. But that wouldn’t be a valid comparison.
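For readers who want to see why percent-proficient changes don't line up across tests with different cutoffs, here is a minimal sketch. It rests on loud assumptions that are not from NAEP or any state: scores are treated as normally distributed in standardized units, the two cut scores are hypothetical, and the 0.2 standard deviation drop is made up for illustration.

```python
from statistics import NormalDist


def pct_at_or_above(cut, mean, sd=1.0):
    """Percent of students scoring at or above `cut`, assuming normal scores."""
    return 100 * (1 - NormalDist(mean, sd).cdf(cut))


def change_in_pct(cut, mean_before, mean_after, sd=1.0):
    """Percentage-point change in students at or above `cut` after the mean shifts."""
    return pct_at_or_above(cut, mean_after, sd) - pct_at_or_above(cut, mean_before, sd)


# Hypothetical: the same learning loss (mean falls by 0.2 SD) on two tests
# whose "proficient" cut scores sit at different points in the distribution.
lenient_cut_change = change_in_pct(cut=0.0, mean_before=0.0, mean_after=-0.2)
strict_cut_change = change_in_pct(cut=1.0, mean_before=0.0, mean_after=-0.2)

print(f"Lenient cut: {lenient_cut_change:.1f} percentage points")
print(f"Strict cut:  {strict_cut_change:.1f} percentage points")
```

Under these assumptions, the identical drop in underlying achievement moves the percent proficient by roughly 8 points when the cutoff sits at the middle of the distribution and by roughly 4 points when it sits one standard deviation higher. The size of a percent-proficient change depends on where the cutoff falls, not just on how much students lost.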

State leaders should evaluate whether the change in their NAEP scale scores from 2019 to 2022 is statistically significant (that data is reported by NCES), and how that change compares to those in other states. States should then examine whether what they’re seeing on NAEP, across all four tests, aligns with the score changes they see on the state assessment.
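As a rough illustration of what “statistically significant” means here, the sketch below runs a simple two-sided z-test on the difference between two independent average scale scores. The function name, the averages, and the standard errors are all hypothetical, and this is a simplified textbook check rather than NCES’s actual procedure; the significance flags NCES publishes remain the source to rely on.

```python
from math import sqrt
from statistics import NormalDist


def score_change_is_significant(mean_2019, se_2019, mean_2022, se_2022, alpha=0.05):
    """Two-sided z-test for the change between two independent average scores.

    Returns (difference, z statistic, p-value, significant at alpha?).
    """
    diff = mean_2022 - mean_2019
    # Standard error of a difference between independent estimates.
    se_diff = sqrt(se_2019 ** 2 + se_2022 ** 2)
    z = diff / se_diff
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, z, p_value, p_value < alpha


# Hypothetical state averages and standard errors (not real NAEP data).
diff, z, p_value, significant = score_change_is_significant(240.0, 1.0, 236.0, 1.1)
print(f"change={diff:+.1f}, z={z:.2f}, p={p_value:.3f}, significant={significant}")
```

With these made-up inputs, a 4-point drop clears the conventional 0.05 threshold, while a 1-point drop with the same standard errors would not. That is why a small change in a state’s average should not be read as a real decline or a real recovery without checking its significance first.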

If there are noticeable differences, leaders should avoid the temptation to cherry-pick the results that tell the most positive story. Instead, they should see those differences as a sign that they need to examine the quality of their state assessment, particularly the efficacy with which the scores were linked across years. This might necessitate potentially difficult conversations with their assessment technical advisors and test providers.

We Must Provide Support for the Long Haul

These tough conversations about assessment results carry serious policy implications. That’s why it’s so important to walk through the process with integrity. Michael Petrilli of the Thomas B. Fordham Institute wrote last week about the possibility that NAEP results could influence the upcoming election. That potential worries me, too.

I urge leaders to resist making political points based on the NAEP results. Contrary to what some might try to argue, the results do not support arguments about how school reopening policies affected scores. The patterns in the test results are too nuanced and varied to support that conclusion. That does not mean some politicians won’t try to make the argument anyway, but they will be wrong. No state or district escaped the effects of the pandemic disruptions, a fact that doesn’t square with politically driven arguments about school policy.

As I wrote last week, pundits and others must avoid “just so” stories used to score political points. Rather, we need to look carefully at the rich set of data offered by this NAEP release, along with other assessment and opportunity-to-learn data, to understand student and school needs.

And understanding must lead to action! The federal ESSER funds provided much-needed – but short-term – emergency relief. Remember: These funds must be spent by September 2024, but the need will continue well beyond this time. State political and policy leaders must continue the funding necessary to enable students to make up lost ground. State departments of education and other partners must provide clear guidance and leadership on evidence-based practices to support accelerated learning.