Why Has It Been So Difficult to Develop a Viable Through Year Assessment System?

A Through Year Assessment System Cannot Both Portray Summative Student Performance and Purport to Be Used to Improve Student Performance in the Short Term

There has been a buzz that “through year” or “through course” assessment is a better way for states to assess students than today’s pervasive end of year summative assessment. However, no through year assessment system has yet been implemented statewide with results accepted as comparable to, and usable in place of, end of year summative assessment scores.

Why Has It Been So Difficult to Get a Viable Through Year Assessment System?

There have certainly been practical, political, and conceptual challenges to through year assessment, which I summarize below. More importantly, in this post I show that there are logical challenges to the validity arguments for using through year assessment systems, both for summative purposes and for combined summative and instructional purposes.

Definition of a Through Year Assessment System

While there are variations in the details, a common definition of a through year assessment is:

An assessment program that is administered in multiple parts at different times during the year, e.g., at the end of each quarterly marking period.

I discuss three main possible use cases for a through year assessment:

- Summative: to support a summative appraisal of student achievement similar to what would be provided by an end of year assessment

- Instructional: to inform instructional actions during the year

- Both Summative and Instructional: to support summative uses and provide instructional information

Through Year Assessment: Summative Interpretation and Use

A through year assessment intended for summative purposes typically gathers evidence throughout the year to supplement or replace the end of year assessment.

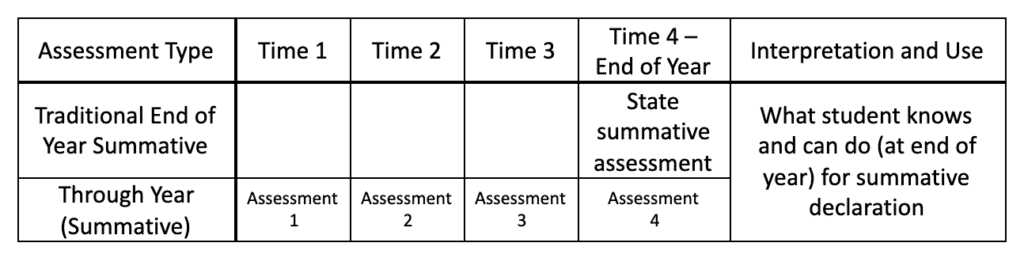

Figure 1: Comparison of assessment structures for summative end of year and summative through year assessments

To replace the end of year summative assessment, a through year assessment must show that the evidence gathered through the year, along with the associated scores and intended interpretations, is at least as good as the evidence gathered at the end of the year about what the student knows and can do at the end of the year. Additionally, the through year model must not be prohibitively burdensome or expensive to implement.

Common arguments in support of through year assessments include that richer assessments are possible (e.g., performance tasks) and that assessments can take advantage of closer connections to curricula (e.g., knowing that students have read a particular novel). In addition, if a student is already taking both a district quarterly interim assessment and a state end of year assessment, it would be more efficient, where possible, to use the through year results instead of the end of year assessment. See more here.

Some practical challenges to using through year (or interim) assessments in lieu of an end of year summative assessment include segmenting the content in a way that fits local curricula across the state (local choices about scope and sequence often differ), deciding how to aggregate the information, ensuring security, and, if the through year assessments draw on interim or classroom assessments, getting agreement that the state may access the data. See more here.

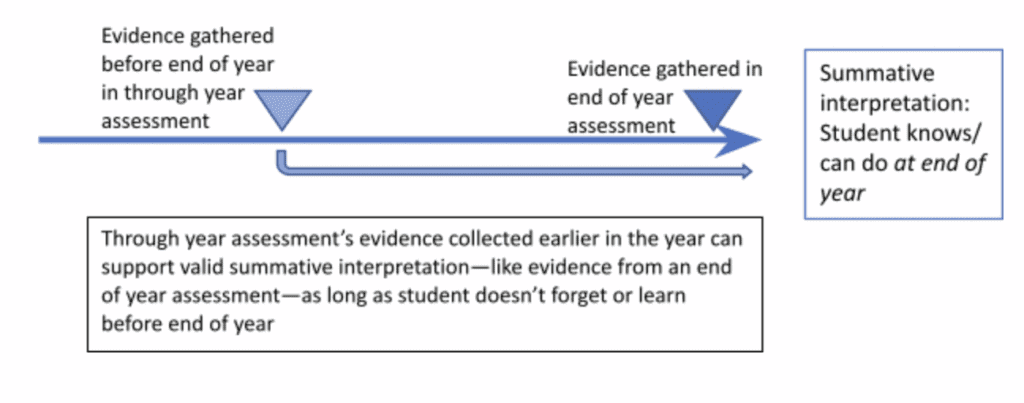

A typical end of year assessment is designed to provide evidence to support the interpretation that “this student knows/can do X [at the end of the year].” The evidence gathered at the end of the year directly supports the claim. A through year design would provide acceptable evidence to support the claim as long as the student does not forget or otherwise change between when assessed and the end of the year. This condition represents a key validity challenge for a through year assessment system.

Establishing the validity of an inference or use of a through year assessment, then, involves showing that the student did not change significantly between when the evidence was gathered as the student progressed through the year, and the end of the year. This concern is primarily about whether the evidence gathered earlier is still relevant at the time specified in the intended interpretation and use.

Figure 2: Condition where through year assessment can support summative use

Through Year Assessment: Instructional Interpretation and Use

To inform instruction, an assessment can take place at any time before that instruction, anywhere from a microsecond to weeks or months earlier. The timing and content can vary in many ways depending on the particular focus, as discussed here. A typical through year design for instructional use is shown in Figure 3, with assessments occurring quarterly or at the end of major instructional units. The defining characteristic is that such assessment is intended to help the student learn within the year. When through year assessments are used for instructional purposes, there is an explicit expectation that what the student knows and can do at the time of the assessment will change before the end of the year, and therefore before the end of year assessment.

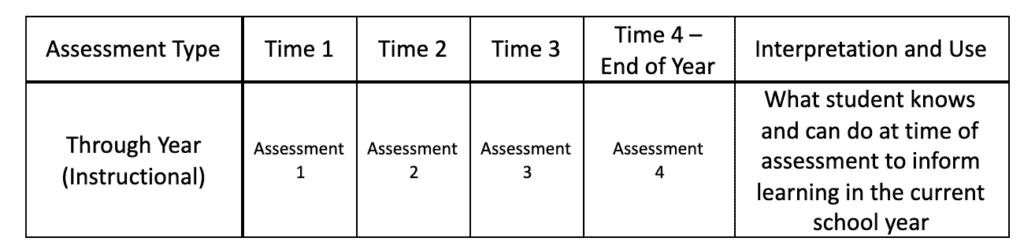

Figure 3: Example through year instructional assessment designs

Through Year Assessment: Summative and Instructional?

People often ask, “Couldn’t a through year assessment serve both summative and instructional purposes?”

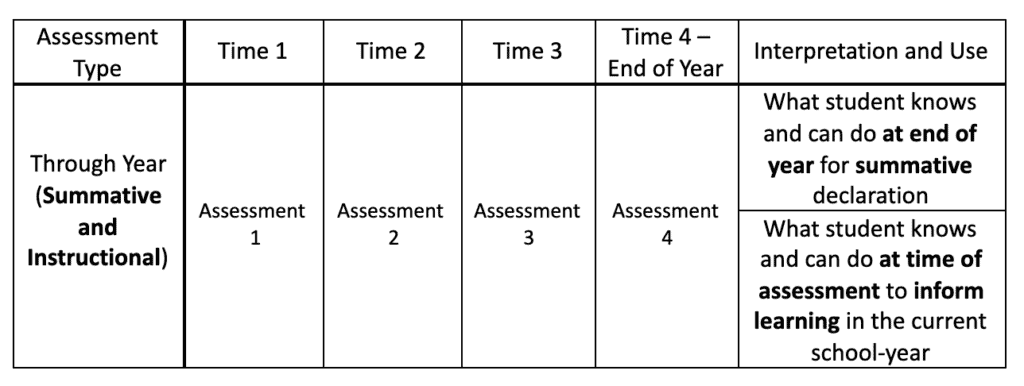

Combining the information from Figures 1 and 3 allows a close examination of how the intended interpretations and uses differ that can help us answer the question.

Figure 4: Proposed design for a through year assessment that serves summative and instructional purposes with the same assessments

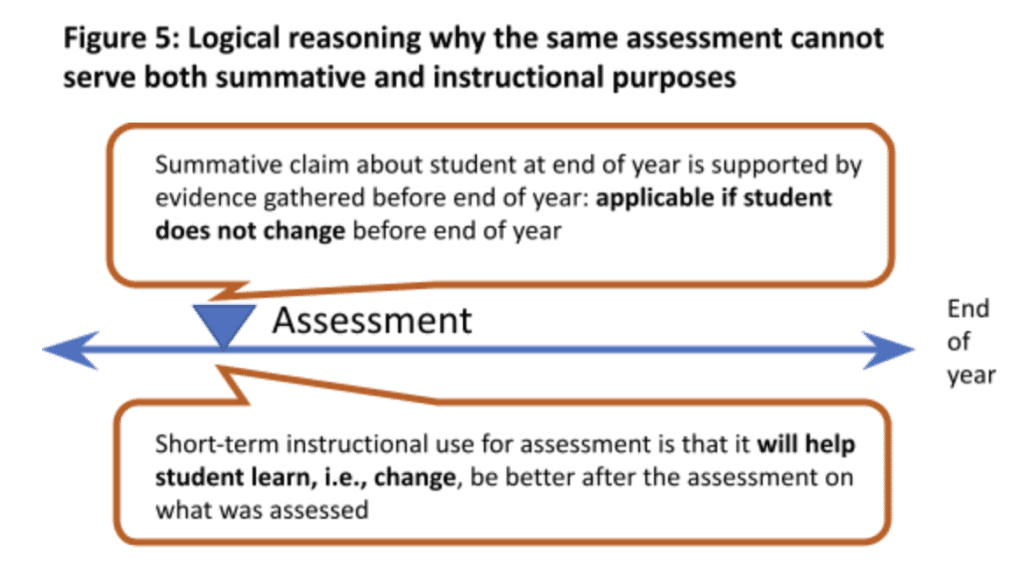

My conclusion is “No.” It is not logically possible for the same assessments, through year or otherwise, to provide both valid summative information, like that from an end of year summative assessment, and information useful for helping students learn during the year.

My reasoning is as follows:

- An end-of-year summative use of an assessment informs a claim about what the student could do at the end of the year. As discussed earlier, a through year assessment can provide evidence suitable for that end-of-year interpretation as long as the student does not change before the end of the year.

- An instructional use of an assessment is intended to help the student learn, improve, or otherwise change before the end of the year. Effective instructional assessment therefore disrupts a summative claim based on that assessment, or on any assessment information gathered before the intended learning.

- In other words, these two uses of the same assessment, as found in through year assessments, are logically opposed to each other. As long as the claim is meant to hold at a time after the assessment and the assumed learning, we cannot say both that the assessment supports valid claims about the student based on the evidence it gathered and that the assessment effectively helps the student learn.

This reasoning can be summarized in a general statement: effective instructional use of an assessment should reduce the “predictive validity” of that assessment for subsequent performance. The format of the assessment (multiple-choice items, rich performance tasks, etc.) does not matter; it is a matter of purpose. This reasoning also extends the earlier example of forgetting: evidence gathered at the time of a through year assessment can support valid interpretations at a later time so long as the student does not change between when the evidence is gathered and when the claim takes effect.
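To make the “predictive validity” point concrete, here is a minimal Python sketch under deliberately simplified, hypothetical assumptions. None of them come from this post. It assumes a 0 to 100 score scale, a “proficient” cut score of 70, through year scores that measure each student perfectly at the time of testing, and an instructional response in which students below the cut close a fixed fraction of their gap to the top of the scale. It then counts how many through year proficiency claims are still true at the end of the year. The function names are illustrative only and do not represent a psychometric model.

```python
# A toy illustration, NOT a psychometric model. The 0-100 scale, the cut
# score, and the rule for how instruction changes students are all
# hypothetical assumptions chosen only to make the logic visible.

CUT_SCORE = 70.0  # hypothetical "proficient" cut on a hypothetical 0-100 scale


def classify(score: float) -> str:
    """Summative claim supported by a score: proficient or not."""
    return "proficient" if score >= CUT_SCORE else "not proficient"


def instructional_gain(through_year: float, effectiveness: float) -> float:
    """Hypothetical instructional use of a through year result.

    Students classified below the cut receive support that closes a
    fraction `effectiveness` (0 = no effect, 1 = fully effective) of
    their gap to the top of the scale. Others receive no extra gain.
    """
    if through_year < CUT_SCORE:
        return effectiveness * (100.0 - through_year)
    return 0.0


def claim_agreement(through_year_scores: list[float], effectiveness: float) -> float:
    """Share of students whose through year classification still holds at year end.

    Assumes each through year score measures the student perfectly at the
    time of testing, so any disagreement comes only from later change.
    """
    still_true = 0
    for score in through_year_scores:
        end_of_year = min(100.0, score + instructional_gain(score, effectiveness))
        if classify(score) == classify(end_of_year):
            still_true += 1
    return still_true / len(through_year_scores)


if __name__ == "__main__":
    scores = [40.0, 55.0, 62.0, 68.0, 75.0, 90.0]  # hypothetical class
    for effectiveness in (0.0, 0.2, 0.5):
        agreement = claim_agreement(scores, effectiveness)
        print(f"effectiveness={effectiveness}: {agreement:.2f} of claims still hold")
```

Running it prints agreement of 1.00, 0.83, and 0.33 for the three effectiveness levels. With no instructional effect, every through year classification still holds at year end. The more effective the targeted instruction, the fewer of those summative claims survive. The sketch deliberately ignores measurement error and forgetting, and either would weaken the link further.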

Conclusion

A single through year assessment might be used for either summative or instructional purposes, but it should not be used for both, because of logical as well as practical barriers. Focusing on one purpose and use will make it easier to solve the technical and operational challenges.

Of course, if the claim or assumptions are changed, then it is possible, to a degree, to have assessments that serve both instructional and summative purposes. I will discuss this possibility in another post about how a through year design is a very natural design for a balanced assessment system and for a “grading” approach to summative design.